Sora App (AKA “Sora 2”) launched last week to much fanfare. And by fanfare I mean people downloaded it and used it like they do any shiny new thing. Mostly, what users did was generate copyrighted material like it was Christmas (or whichever holiday you celebrate where you get whatever you want). There was Pikachu committing unthinkable violence, Jenna Ortega (i.e., Wednesday Addams) being deepfaked without permission, and South Park characters doing anything and everything you wanted. My feed was full of people generating videos straight out of the latest Dune movies.

The amazing thing, beyond what Sora could generate, was that a lot of the copyrighted material it referenced sits behind paywalls like Netflix or has to be purchased to access. The only way OpenAI would have access to it is by torrenting it illegally. And as we know from the AI court decisions, that is one of the few things these companies are legally liable for and can be sued for damages over. Yes, there is their BS line that “copyright holders just need to opt out”, but we’ve blogged about this before: that is admitting to stealing outright, then telling the people who were ripped off to find the theft themselves and try to claw it back before even more of their work is taken.

Even with new guardrails up that block a lot of the previously available copyrighted material, I was able (today) to generate Drew Carey’s voice, image, and likeness; Pat Sajak’s voice, image, and likeness; and Bob Ross’s voice, image, and likeness. I’m guessing none of them were asked for permission before OpenAI let anyone and everyone deepfake them.

OpenAI and Sora have repeatedly claimed that their goal is to maximize the “public benefit”. Please, anyone, tell us how a deepfake generator that churns out AI slop benefits the public. We know OpenAI will soon monetize Sora, so it benefits them, for sure. But it is high time we call BS on the BS. Tech bros have argued that US AI companies need to ignore copyright law because they need to “beat China”. Beat China to what, exactly? Being able to rip off creatives the most? Creating the best deepfakes? Seriously, what specific thing does AI need to win at for national security purposes? Because it has been obvious to us that, just like YouTube when it launched, these companies are using stolen IP as a way to make money off the work of others. It’s as simple as that.

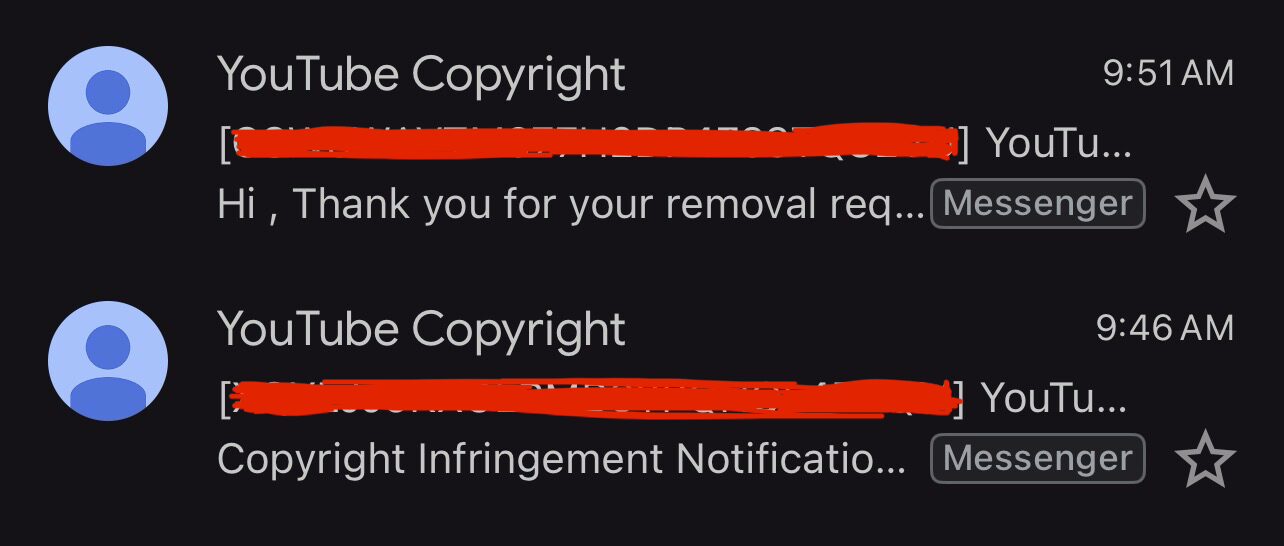

Stay tuned, because Copyright Slap will soon be entering the deepfake removal game, and we aim to help users get deepfakes taken down as swiftly and efficiently as we do pirated content online. Copyright Slap offers a real public benefit, and we’d argue probably a lot more of one than OpenAI does.